If your website analytics look too good to be true, you might be dealing with more than just a surge in real visitors. Traffic bots—automated programs that mimic human browsing—are now responsible for over half of all web traffic, making it harder than ever to trust your data.

I've seen how these bots can quietly distort metrics, drain ad budgets, and even put businesses at risk of search penalties or downtime. But not all bots are out to cause harm; some play a legitimate role in testing and monitoring, which adds another layer of complexity.

In this article, I'll break down exactly how traffic bots work, how they differ from other automated tools, and why their impact matters for anyone running a website. You'll get practical tips for spotting suspicious activity, learn about the latest bot detection strategies, and see which tools can help protect your analytics and brand.

Whether you're a marketer, developer, or business owner, you'll walk away with clear steps to audit your site, filter out fake visits, and keep your data reliable—no matter how clever the bots become.

What Is a Traffic Bot?

Let’s break it down. A traffic bot is automated software that generates website visits, page views, ad clicks, or fake form submissions. The catch? Analytics platforms record these as real users.


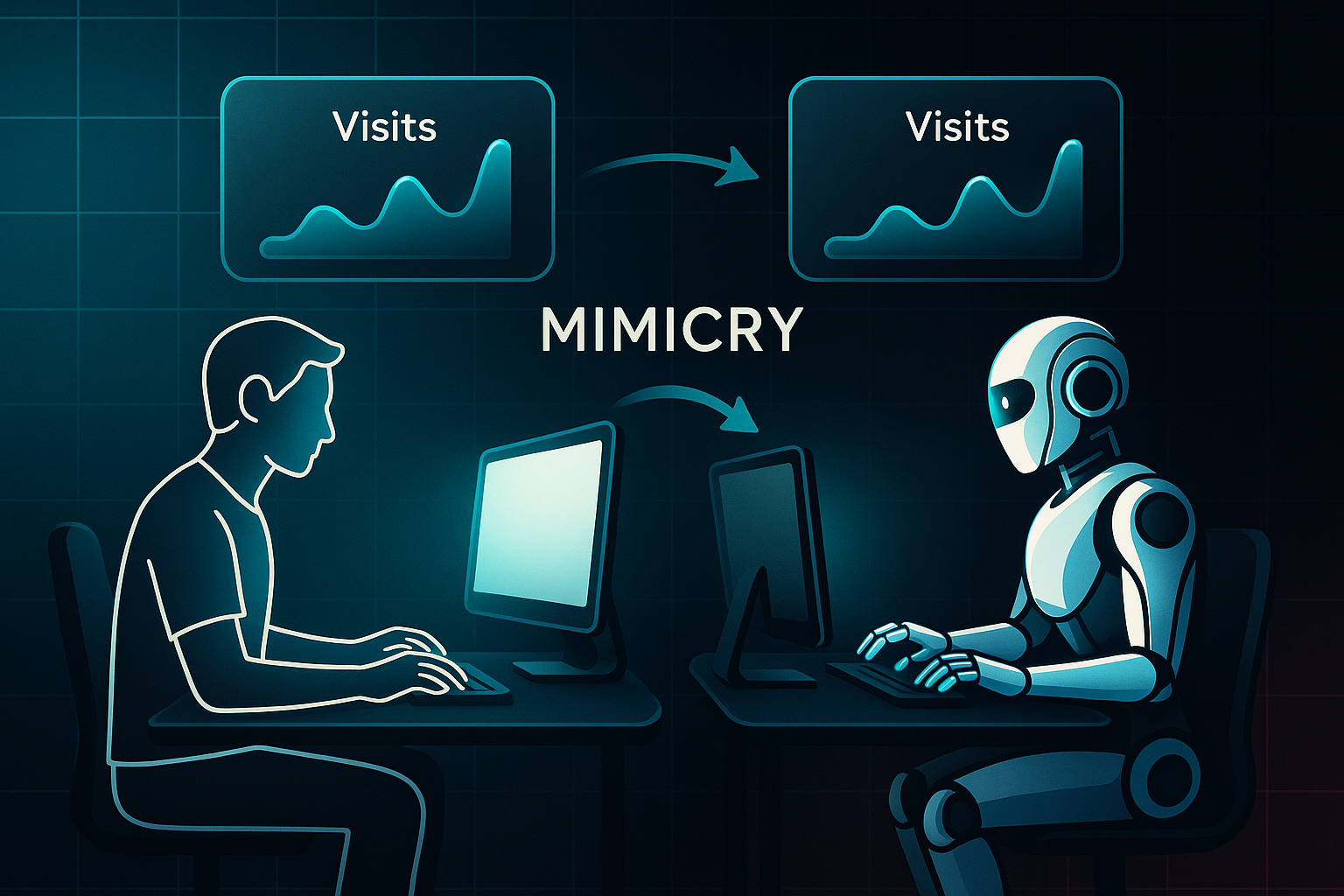

How do they manage this trick? Traffic bots mimic human behaviour—browsing, clicking ads, filling forms—yet no people are really involved. Some bots simply reload pages, but clever ones use headless browsers or AI to mirror real habits and dodge detection.

But not every traffic bot is a villain. Businesses sometimes use them to stress-test websites, monitor uptime, or gather SEO data.

This way, site owners spot issues before customers do.

Still, there’s a dark side. Many misuse traffic bots to skew website metrics, pull off ad fraud (think the infamous Methbot scam that cost millions), unfairly boost SEO rankings, or even overwhelm servers.

A study by CHEQ and economists at the University of Baltimore found that overall click fraud cost online retailers $3.8 billion by the end of 2020.

What do all these bots share? Automation and scale. Their goal is to create a buzz that’s only in the numbers—a site that feels busy, but isn’t.

Here’s a picture: a shop full of mannequins. From outside, it appears crowded, but inside, there’s nobody shopping. That’s how traffic bots trick site owners and marketers, conjuring an illusion of popularity.

How Traffic Bots Differ from Other Bots and Human-Farmed Traffic

- Traffic bot: Fakes visits or clicks and is fully automated. Methbot is a well-known example.
- Chatbot: Handles chat, not web visits. Think ChatGPT, which talks but doesn’t click.
- Click farm: Real people abroad, paid for fake visits or clicks.
- Legitimate bot: Bots like Googlebot, openly indexing or monitoring, easy for analytics to filter.

Why Understanding Traffic Bots Is Important

Here’s a striking fact: bots now account for over half of all web traffic.

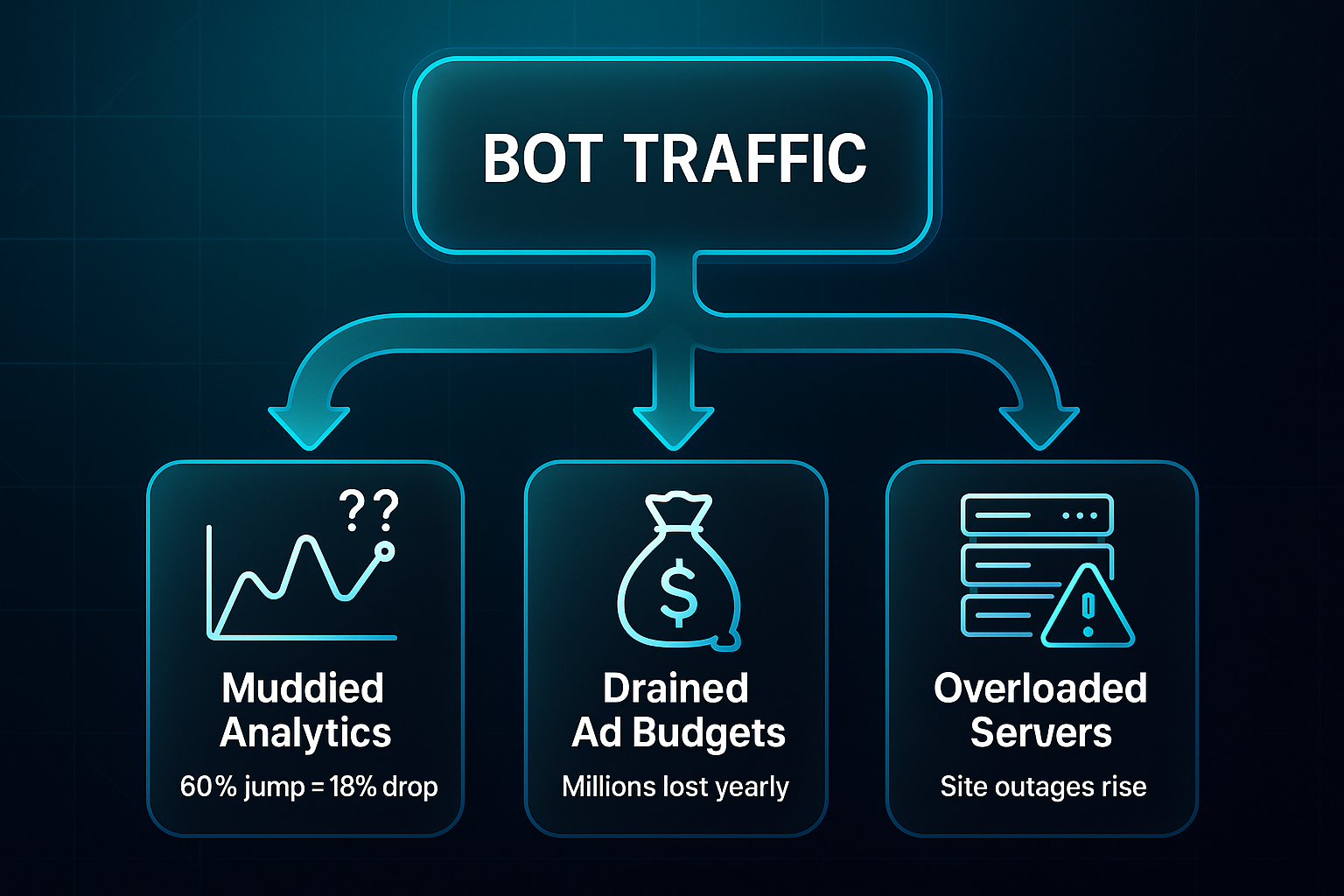

The danger? False visits muddy analytics, drain ad budgets, and overload servers. One European airline saw a 60% jump in fake visits, which triggered an 18% drop in bookings.

To trust your data and protect your brand, spotting traffic bots is absolutely essential.

Failing to filter out sophisticated bot traffic can severely skew analytics, leading to misguided marketing investments and a distorted understanding of true user engagement. This not only wastes budget but can also damage brand reputation by creating a false sense of reach.

How Do Traffic Bots Work?

Let’s get behind the scenes. How exactly do traffic bots make your website stats spike? The story is more clever than you might expect.

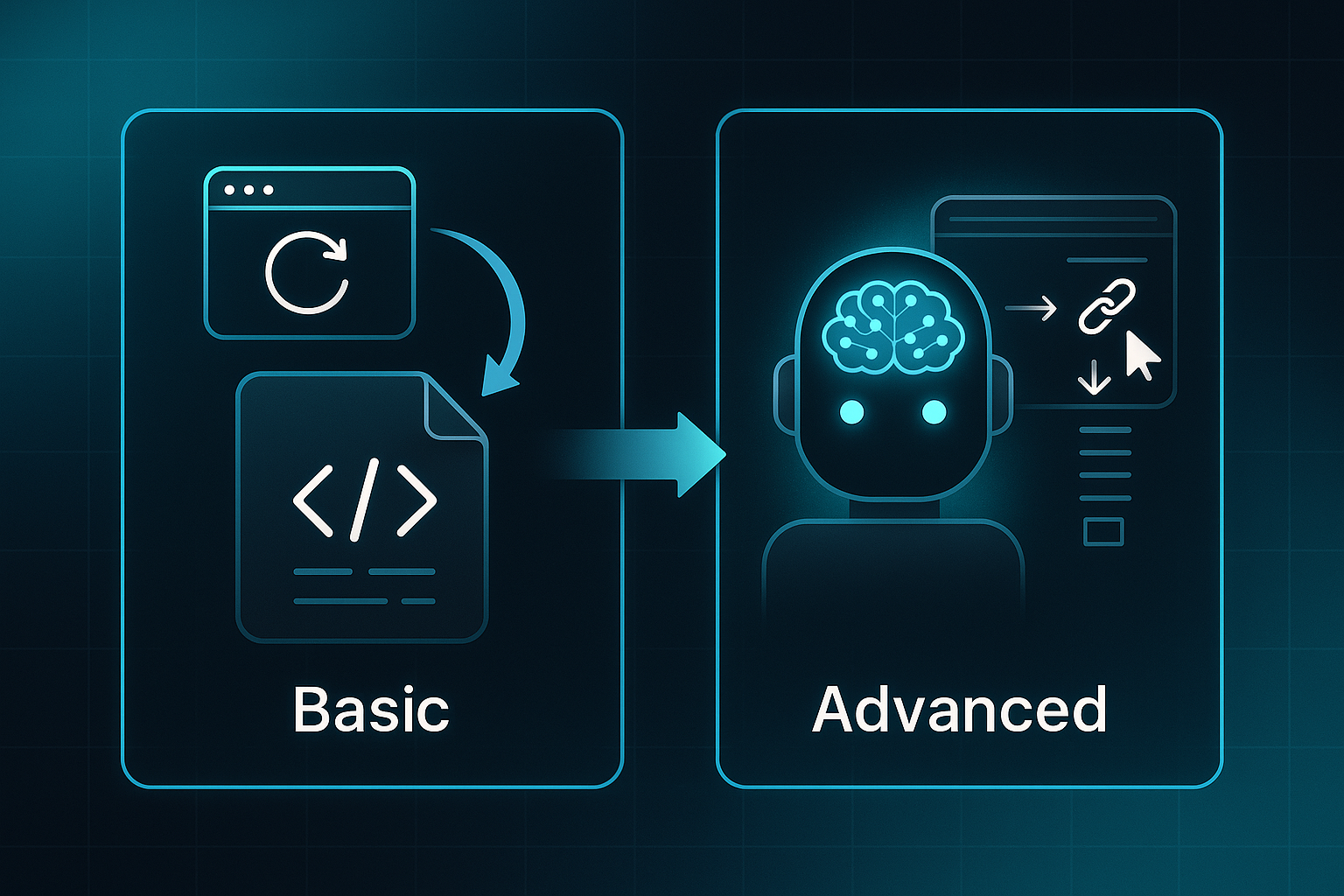

Basic Mechanisms: Imitation in Action

At their simplest, traffic bots pretend to be humans. Software reloads pages, clicks ads, and fills forms automatically, so analytics record busy activity without a single person involved.

Sometimes, it’s just a basic script refreshing a page. But other bots act more lifelike by using headless browsers: they’ll linger, follow links, or even move the mouse for added realism.

Sophistication: Dodging Detection

Okay, crude bots are easy to spot—strange click speeds, unnatural session lengths, obvious robotic patterns. To fight back, bot creators have evolved.

Modern bots use AI and machine learning to study and mimic human habits. They’ll switch up browser types, rotate through thousands of global IP addresses, and even reproduce mouse movement or scrolling. Detection becomes an uphill battle.

The rise of accessible AI is supercharging the bad bot threat, enabling automated attacks that are more sophisticated and harder to detect than ever before.

The smartest bots use VPNs and proxies to hide their tracks. Headless Chrome and other stealthy technologies help them blend in—making a bot session look just like a real visit.

Scaling: The Botnet Phenomenon

Ready for the big leagues? Sophisticated operators harness vast botnets—networks of compromised computers worldwide. These botnets bombard sites with massive surges of fake visits, all appearing organic.

Take Methbot. It manipulated hundreds of thousands of IPs, faked video ad views, and stole millions before eventually being revealed.

Staying Ahead: Trends and Tactics

Bots aren’t staying still. Developers adapt at lightning speed. As detection grows smarter, bots reinvent themselves. Last year, bots made up just over half of all web activity, a staggering share.

Key Traffic Bot Technologies

- Headless Browsers: Invisible browser engines replay real user actions for deceptive visits.
- Cloud Servers: Let bots run from diverse regions at big scale.
- Proxy Networks: Hide bot origins and mimic distributed traffic.
- AI Algorithms: Learn browsing and click patterns to blend in.
- Botnet Control Panels: Orchestrate massive surges from countless compromised devices.

It’s a never-ending duel—the more “real” bots become, the harder they are to catch. That’s the challenge for today’s defenders, and it isn’t slowing down.


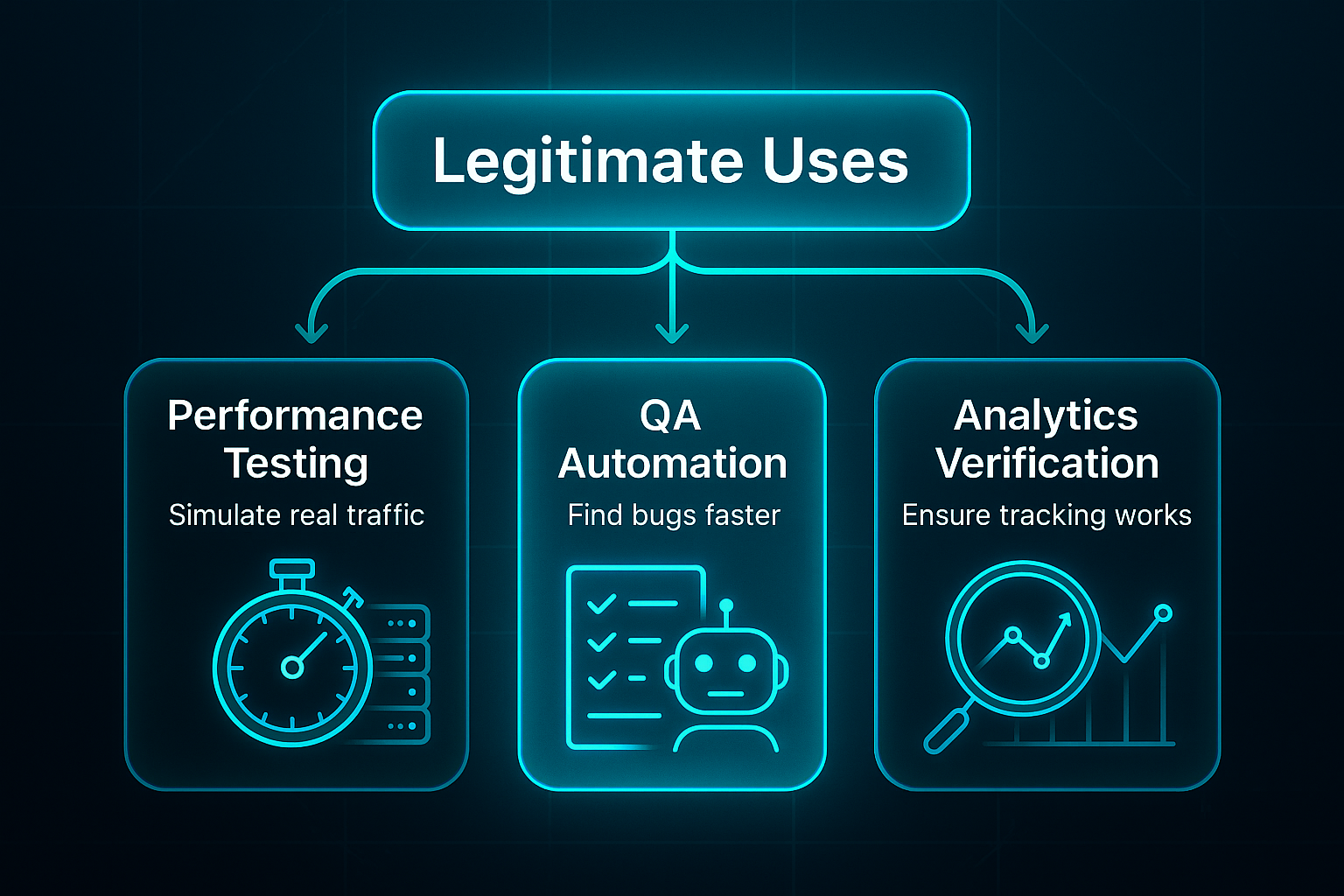

Legitimate Uses of Traffic Bots

Business and Testing Applications

It might sound surprising, but traffic bots aren’t always working against you. In fact, they’re crucial when businesses run performance and load testing. Before a big launch, bots simulate crowds of visitors so IT teams know if their servers can handle real demand.

QA teams use them to streamline testing, too. Rather than clicking endlessly, bots run through typical user journeys—logging in, checking out, submitting forms—finding bugs efficiently before customers ever encounter them.

Bots also shine in analytics verification. By repeating real actions like form submissions or purchases, they ensure tracking works perfectly so campaigns get off on the right foot.

SEO and Analytics Monitoring Tools

SEO specialists put bots to work tracking keyword rankings, running searches from multiple devices or locations. This quickly surfaces ranking changes after algorithm updates.


They’ll also simulate conversions to confirm analytics tags and pixels work, especially before major site migrations. By testing various paths, they help digital teams guarantee reporting accuracy, no matter the source or device.

Best Practices to Prevent Legitimate Bots from Corrupting Analytics

Here’s the trick: IP allow-listing makes sure test bots don’t tangle with real analytics. Tag and label all bot sessions within your analytics platform.

Finally, filtered views for internal testing keep your decisions based on genuine visitor data—not the dry runs behind the scenes.
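To make the allow-listing idea concrete, here is a minimal sketch of labelling sessions by IP before they reach your reports. The network ranges, the `label_session` helper, and the `traffic_type` field are all hypothetical names chosen for illustration; your analytics platform will have its own way of defining internal traffic.

```python
import ipaddress

# Hypothetical allow-list of networks used by internal test bots.
# These are documentation-only address ranges, not real infrastructure.
TEST_BOT_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.17/32"),
]


def label_session(client_ip: str) -> str:
    """Return 'internal_test' for allow-listed IPs, otherwise 'visitor'."""
    address = ipaddress.ip_address(client_ip)
    if any(address in network for network in TEST_BOT_NETWORKS):
        return "internal_test"
    return "visitor"


sessions = [
    {"ip": "203.0.113.45", "page": "/checkout"},  # load-test bot
    {"ip": "192.0.2.10", "page": "/pricing"},     # ordinary visitor
]

# Tag every session, then keep only genuine visitors for reporting.
labelled = [{**s, "traffic_type": label_session(s["ip"])} for s in sessions]
genuine = [s for s in labelled if s["traffic_type"] == "visitor"]
```

Labelling rather than deleting keeps the test sessions available for QA while leaving them out of the numbers you make decisions on.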

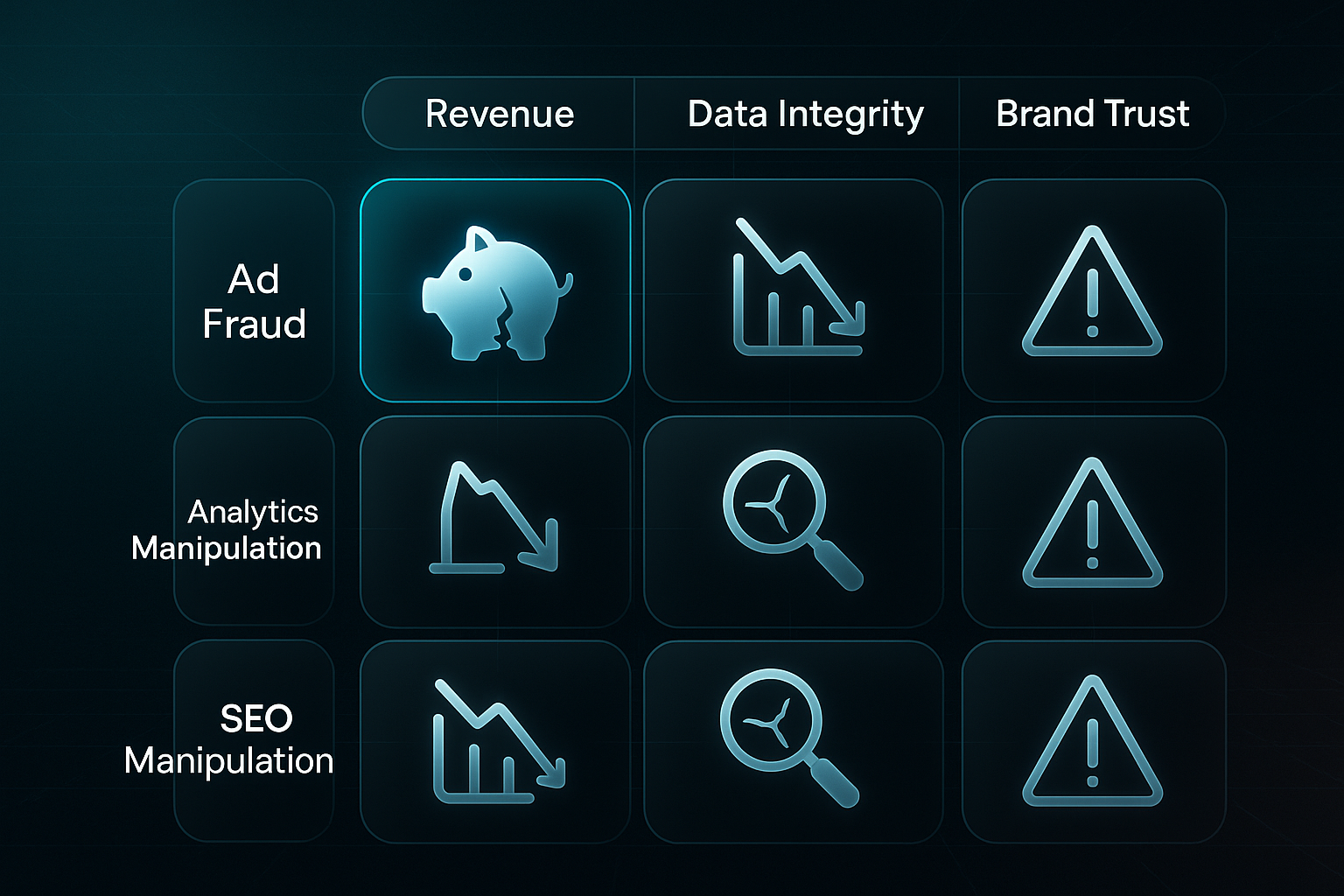

Malicious and Abusive Uses of Traffic Bots

Traffic bots aren’t just up to a bit of mischief—they’ve grown into complex tools for disruption and fraud. With AI now part of the arsenal, businesses face a relentless battle to keep ahead.

Key Malicious Uses and Threats

So how do these bots create chaos online? Well, at their worst, traffic bots orchestrate scams that drain budgets and undermine entire campaigns.

- Ad fraud: Bots copy real browsing habits and rotate IPs, clicking ads to steal advertising money.
- Analytics manipulation: Bots inflate activity or fake conversions, distorting web metrics and derailing marketing strategy.
- SEO manipulation: They scrape content, spam rivals, or automate shady link-building, all of which risk search penalties for the sites involved.
- DDoS attacks via botnets: Huge networks of bots overwhelm sites or APIs, causing outages and turmoil.
- AI-driven activity: Modern bots use AI to evolve attacks rapidly, producing fake reviews or launching credential theft at scale.

What kind of damage are we talking about, really? Here’s how these threats hit businesses where it hurts.

Impact of These Threats on Businesses

Malicious bots eat away at a company’s digital foundation. The aftermath? Lost revenue, damaged trust, and some serious clean-up.

- Resource strain: Defending against attackers means extra server power, tighter defences, and non-stop monitoring.
- Lost ad spend: Fake clicks erode budgets, leaving genuine campaigns with skewed results and poor returns.
- Brand and trust erosion: Service disruptions and fake reviews wreck customer loyalty and can invite public criticism.
- SEO penalties: Sites hit by bots might see search demotion or blacklisting, which demands lengthy repairs.
- Disrupted operations: Attacks cause downtime and pull teams away from their work, harming productivity.

Recent Trends in Malicious Bot Traffic

The weird part is that today’s malicious bots can look and act almost exactly like genuine users. They target APIs and are now available as Bots-as-a-Service on demand. The rise of AI means attackers adapt frighteningly fast, turning bot defence into a bigger challenge every year—even for the tech-savvy.

For the first time in a decade, automated bot traffic has surpassed human visits, now constituting 51% of all web activity, primarily driven by a surge in bots powered by accessible artificial intelligence.

The Impact of Traffic Bots on Websites and Online Businesses

Distorted Analytics and Marketing Data

Bots drove as much as 51% of website visits in 2024, reaching 59% in travel and gaming.

When so many visits aren’t real, analytics tell a skewed story. User numbers and engagement look higher than they are.

That makes A/B testing less trustworthy—and leaves business decisions on shaky ground.
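A quick worked example shows how far the numbers can drift. The sketch below assumes, purely for illustration, that bots never convert and that you already have an estimate of the bot share. The session and conversion counts are made up, and `human_conversion_rate` is a hypothetical helper.

```python
def human_conversion_rate(total_sessions: int, conversions: int, bot_share: float) -> float:
    """Conversion rate over human sessions only, assuming bots never convert."""
    if not 0 <= bot_share < 1:
        raise ValueError("bot_share must be at least 0 and below 1")
    human_sessions = total_sessions * (1 - bot_share)
    return conversions / human_sessions


# Made-up figures: 10,000 recorded sessions and 200 conversions.
reported = 200 / 10_000  # the 2% rate a dashboard would show
adjusted = human_conversion_rate(10_000, 200, bot_share=0.51)  # about 4.1% among humans

print(f"reported {reported:.1%}, adjusted {adjusted:.1%}")
```

With a 51% bot share, the real conversion rate is roughly double what the dashboard reports, which is exactly the kind of gap that makes test results hard to trust.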

Wasted Ad Budgets and Reduced ROI

Bots chew through ad budgets with fake clicks and impressions. Each year, over $71 billion disappears due to invalid traffic, with some platforms hitting 25% bot rates.

This leads to wasted time chasing unreal leads, especially in retail, travel, finance, and B2B.

SEO Risks and Search Engine Penalties

Bots faking visits and links put sites at risk of search engine penalties, including demotion or even deindexing.

Millions of domains lose over 50% of their organic traffic in severe cases. Recovery can take many months, and even a brief bot spike can do damage.

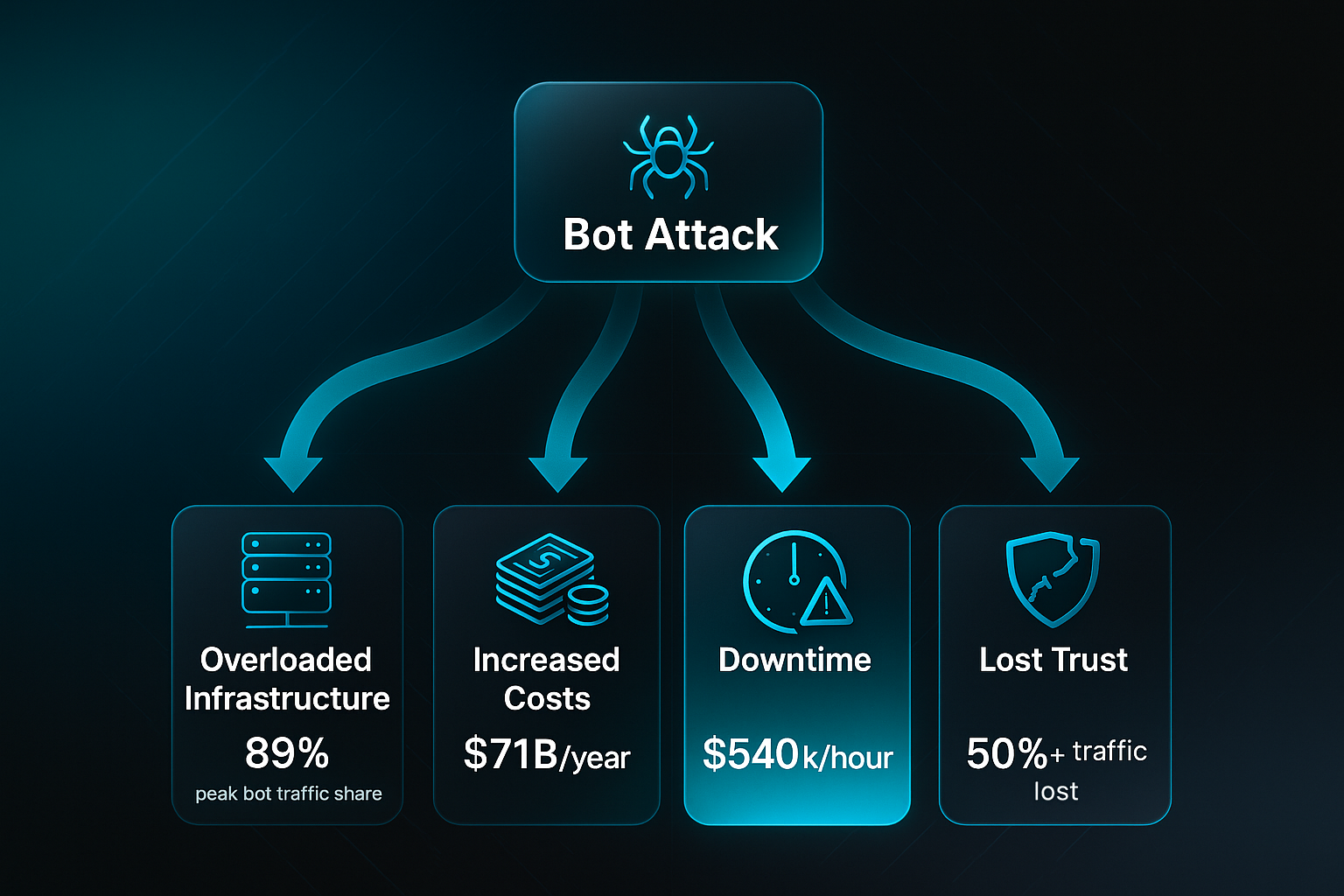

Resource, Security, and Trust Fallout

Bots overload infrastructure, increase costs, and cause downtime. During major attacks, bots can make up 89% of traffic, with some organisations losing a third of their server capacity.

Downtime costs of up to $540,000 per hour hit hard. Performance drops and security issues push customers away and harm reputation, especially where trust means everything.


Key Impact Metrics

- Bots’ share of site traffic: Up to 51% in 2024; key sectors 42–59%.
- Annual ad losses: Up to $186 billion globally; $71 billion from fake ad activity.
- SEO penalties: Millions of domains see 50%+ organic loss in severe cases.
- Infrastructure burden: One-third server capacity lost to bots; downtime up to $540,000 per hour.
- Security escalation: DDoS attacks up 90% year on year; 44% now target APIs.

How to Detect Traffic Bots

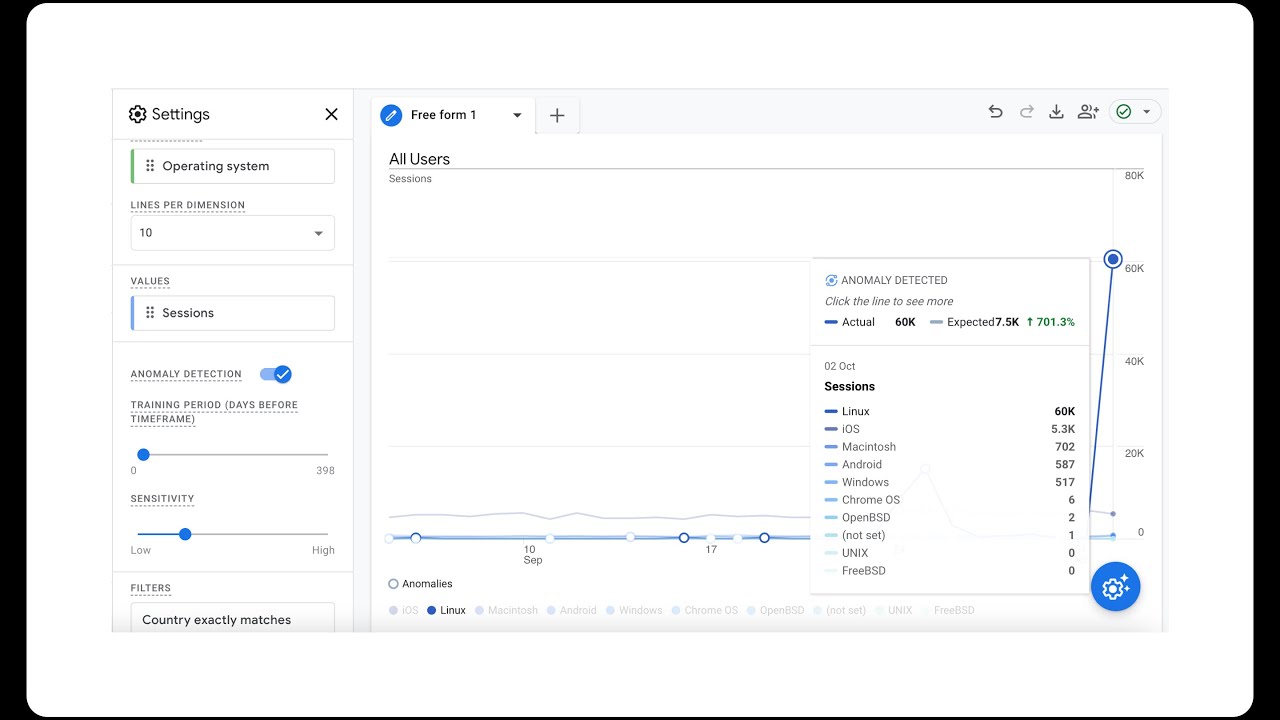

Spotting traffic bots is more of an art than a mystery. Analytics dashboards—like Google Analytics, Adobe Analytics, or Matomo—are usually the first place clues emerge. These platforms log every visit, and odd patterns often reveal themselves, even if you’re not a technical expert.


Detecting Bot Activity in Analytics Platforms

Typically, bot traffic stands out with strange, unexpected patterns in your data.

So, what should make you take a second look?

- Unexplained traffic spikes: Sudden surges in visitors that aren’t linked to campaigns or seasonal trends often signal a bot swarm.
- Irregular bounce rates: Very high or low bounce rates, near 100% or under 10%, often signal automation at work.
- Geographic anomalies: Spikes from countries or regions you’d never expect in your customer base.
- Unusual devices and user-agents: Sessions using odd browsers, rare devices, or strange user-agent strings that real users wouldn’t have.
- Suspicious user behaviour: Rapid-fire page views, the same path repeated, or session durations far outside the usual.
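If you export session data, a few of these warning signs can be checked with a short script. What follows is a rough sketch, not a detection product: the `Session` fields, the expected-country set, and the thresholds are all assumptions you would tune against your own baseline. The user-agent tokens are ones that headless Chrome, the Python `requests` library, and `curl` send by default.

```python
from dataclasses import dataclass


@dataclass
class Session:
    pages_viewed: int
    duration_seconds: float
    user_agent: str
    country: str


# Illustrative values only; adjust them to your own audience and traffic.
EXPECTED_COUNTRIES = {"GB", "IE", "US"}
MAX_PAGES_PER_SECOND = 1.0
SUSPICIOUS_AGENT_TOKENS = ("headlesschrome", "python-requests", "curl")


def suspicion_flags(session: Session) -> list[str]:
    """Return the names of the warning signs this session triggers."""
    flags = []
    if session.duration_seconds > 0:
        if session.pages_viewed / session.duration_seconds > MAX_PAGES_PER_SECOND:
            flags.append("rapid_page_views")
    elif session.pages_viewed > 1:
        # Several pages in zero recorded time is also rapid-fire behaviour.
        flags.append("rapid_page_views")
    if session.country not in EXPECTED_COUNTRIES:
        flags.append("unexpected_geography")
    agent = session.user_agent.lower()
    if not agent or any(token in agent for token in SUSPICIOUS_AGENT_TOKENS):
        flags.append("unusual_user_agent")
    return flags


bot_like = Session(40, 8.0, "Mozilla/5.0 (X11; Linux x86_64) HeadlessChrome/120.0", "BR")
human_like = Session(4, 180.0, "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0", "GB")

print(suspicion_flags(bot_like))
print(suspicion_flags(human_like))
```

A single flag is rarely proof on its own. Treat flagged sessions as a segment to investigate rather than something to block automatically.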

Digging into analytics deeper sometimes brings up even stranger clues. Low engagement from unlikely places or hundreds of ultra-short visits from the same spot is a definite red flag.

Most analytics platforms offer built-in bot filtering. Universal Analytics exposed it as the “Exclude all hits from known bots and spiders” setting, while GA4 excludes known bots and spiders automatically. Advanced segmentation then helps you slice data by source, device, or behaviour and focus on what’s odd.

Extra support comes from tools like SpamGuard or ClickCease, using blacklists and behavioural models. Just keep subscription costs and possible false positives in mind.

If patterns look off, segment those sessions, document what you find, and export the evidence for technical checks. With the right process, catching bad bots feels much less daunting.

How to Prevent or Mitigate Malicious Bot Traffic

Spotting bots is just the start. Actually stopping them means building layers of defence—like a security guard, bolted doors, and an alarm all working together.

Core Technical Prevention Strategies

Any website, whether large or small, should start here to keep out most intruders.

- Web Application Firewalls (WAFs): Block suspicious activity as it comes in. Stay on top of rule updates and activity logs, because bots evolve fast.
- CAPTCHAs: Add challenges on registrations and forms. Adaptive CAPTCHAs foil bots without frustrating real users at every turn.
- Rate Limiting: Set request limits per IP or user. Flexible thresholds stop bot swarms but let genuine visitors through.
- IP Blacklists: Block bad actors using threat feeds. Update frequently, because malicious addresses shift constantly.
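Rate limiting is the easiest of these to picture in code. Here is a minimal in-memory sketch of a sliding-window limiter keyed by client IP. The class name, the limits, and the single-process design are assumptions for illustration; in production this job usually sits in your WAF, CDN, or reverse proxy.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class SlidingWindowRateLimiter:
    """Allow at most max_requests per client within window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client IP -> timestamps of allowed requests

    def allow(self, client_ip: str, now: Optional[float] = None) -> bool:
        # An explicit timestamp can be passed in, which keeps the example testable.
        now = time.monotonic() if now is None else now
        hits = self._hits[client_ip]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=10.0)
results = [limiter.allow("203.0.113.9", now=t) for t in (0.0, 1.0, 2.0, 3.0, 12.5)]
print(results)  # [True, True, True, False, True]
```

The fourth request is refused because three already sit inside the ten-second window. By 12.5 seconds those have expired, so the client gets through again.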

Advanced Mitigation and Monitoring Methods

If bot attacks persist, it’s time for smarter tools.

- Bot Management Platforms: Rely on machine learning and behaviour analysis to spot sneaky bots. Dashboards help you respond in real time.
- Device Fingerprinting: Track unique devices, not just IPs, exposing bots switching addresses.
- Behavioural Biometrics: Detect bots through awkward mouse moves or unnatural typing. Real human quirks are hard to fake.
- Honeypots/Decoys: Plant invisible traps just for bots, then block those caught.
- DevOps Integration: Automate new bot rules and quick response within your workflows.
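The honeypot idea fits in a few lines. In this sketch, the form includes an extra field that people never see because it is hidden with CSS, so only automated form-fillers tend to complete it. The field name `website_url` and the `is_probable_bot` helper are hypothetical.

```python
from typing import Mapping

# Hypothetical honeypot field, hidden from people with CSS.
HONEYPOT_FIELD = "website_url"


def is_probable_bot(form_data: Mapping[str, str]) -> bool:
    """A filled honeypot field suggests an automated submission."""
    return bool(form_data.get(HONEYPOT_FIELD, "").strip())


human_submission = {"email": "ada@example.com", "website_url": ""}
bot_submission = {"email": "x@example.com", "website_url": "http://spam.example"}

print(is_probable_bot(human_submission))  # False
print(is_probable_bot(bot_submission))    # True
```

Sophisticated bots can learn to skip hidden fields, so a honeypot works best as one cheap layer among several rather than a defence on its own.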

Separating Legitimate and Unwanted Bot Activity

You want to block malicious bots—without losing the useful ones.

- Explicit Tagging: Assign trusted bots unique IDs or IPs, then filter in analytics.
- Whitelisting/Blacklisting: Maintain and review lists of allowed and blocked bots, watching user agents closely.
- Behavioural/Demographic Filters: Flag unusual usage or locations for deeper investigation.
- Continuous Review: Refresh defences regularly, as bot tactics always change.
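As a rough illustration of list-based separation, the sketch below sorts requests by user-agent token. The token lists and the `classify_user_agent` helper are examples I've made up, not a recommended policy. User-agent strings are easy to spoof, so an "allowed" match should still be confirmed another way, such as checking the source IP.

```python
# Example tokens only; keep your own lists under regular review.
ALLOWED_BOT_TOKENS = ("googlebot", "bingbot")
BLOCKED_BOT_TOKENS = ("python-requests", "scrapy")


def classify_user_agent(user_agent: str) -> str:
    """Return 'blocked_bot', 'allowed_bot', or 'unclassified'."""
    agent = user_agent.lower()
    # Check the block list first so a spoofed mix of tokens is still refused.
    if any(token in agent for token in BLOCKED_BOT_TOKENS):
        return "blocked_bot"
    if any(token in agent for token in ALLOWED_BOT_TOKENS):
        return "allowed_bot"
    return "unclassified"


samples = [
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "python-requests/2.31.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) Safari/605.1.15",
]
for ua in samples:
    print(classify_user_agent(ua))
```

Anything left "unclassified" is ordinary traffic as far as this check is concerned, and that is where the behavioural filters take over.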

The Future of Traffic Bots and Detection

AI-Driven Bots: Realism and New Threat Vectors

Ever wondered how traffic bots keep getting smarter? Artificial intelligence is giving them a real edge—they can now mimic the smallest human details. We’re not just talking about random clicks; these bots scroll convincingly, move the mouse in odd patterns, and time their actions to look just like genuine users. The sneaky part? Their behaviour adapts in real time, so old-school defences quickly fall behind.

Here's the thing: botnets-as-a-service are making sophisticated attacks accessible to anyone, not just tech whizzes. If you want millions of fake visits, you just rent them. Take ByteSpider—back in 2023, it used global IP addresses to launch a tidal wave of fake requests against travel and retail sites.

And when these bots get blocked? Thanks to adversarial AI, they actually learn from it. They adjust their digital fingerprints, tweak timings, even switch browser details—returning with a fresh disguise after every failed attempt. That makes them much harder to catch.

High-traffic zones like e-commerce, travel bookings, and event ticket sites—anywhere transactions matter—are especially vulnerable. In 2024, industries like these saw a 165% jump in targeted, AI-powered bot attacks. It's a rapid escalation, and honestly, that's putting real pressure on businesses to step up their defences.

Legal and Ethical Considerations of Traffic Bot Use

Legal Risks and Compliance Requirements

Using traffic bots isn’t just a technical shortcut—it’s a legal risk. Faking visits can break the UK CAP Code or FTC rules, exposing you to fines or account suspensions.

Major platforms like Google, Meta, and LinkedIn strictly ban bots, and will pursue action—including legal—against unauthorised automation. Under GDPR or the UK’s DPA 2018, you must be transparent if bots process personal data or influence profiling.

Global companies contend with shifting requirements as disclosure and compliance rules vary across the UK, EU, US, and APAC.

And this year, enforcement is up—sectors like travel and telecom are regularly seeing more suspensions, fines, and lawsuits tied to artificial traffic.

Ethical Boundaries and Best Practices

Never use bots to mislead or distort analytics. Be transparent, label test traffic, and always respect platform rules and robots.txt.

Slip up, and both your reputation and finances are at risk. Auditing and clear records protect you.

Solutions and Tools for Managing Bot Traffic and Protecting Analytics

Enterprise Bot Detection and Mitigation Tools

Let’s be honest—the bots just keep getting smarter. Organisations now lean on advanced bot management solutions to safeguard analytics and business operations. 75% of e-commerce and finance companies have adopted these defences to keep up as threats escalate.

Source: cloudflare.com

Today’s leading platforms use AI-driven analysis, clever behavioural profiling, and deep data to catch ever-evolving bots. The goal? Block attackers while keeping things seamless for genuine users.

Source: imperva.com

Source: humansecurity.com

Source: datadome.co

Here are the leaders you’ll see in this space:

- Cloudflare Bot Management: Uses AI modelling and Bot Fight Mode. Retail and gaming clients see 40–60% fewer suspicious visits after setup.
- DataDome: Hybrid AI, fast dashboards, and instant mitigation keep performance high. E-commerce and fintech brands get sub-millisecond bot detection.
- HUMAN (PerimeterX): Strong behavioural analytics and flexible APIs. The Human Challenge feature means a better user experience and 50% fewer false positives.
- Imperva Advanced Bot Protection: Deep analytics with machine learning. It integrates easily and tracks global threats for major brands.

Real-world results prove the value—a leading fintech stopped 63% of fake account attempts with HUMAN, and a major e-tailer cut inventory abuse using Imperva.

Striking the right balance is crucial. Too strict, and you risk false positives that push away real customers. Adaptive controls and regular tuning keep your site safe, but still welcoming.

Practical Checklist: How to Audit and Protect Your Website from Traffic Bots

Step-by-step Audit Actions and Prevention Priorities

Ready for clean, trustworthy data? Here’s a checklist to keep traffic bots at bay and protect your analytics.

- Review analytics weekly: Scan for traffic spikes, odd countries, or strange engagement. Record anything that stands out.
- Enable bot filters: Turn on “Exclude all hits from known bots” where your platform offers it (GA4 excludes known bots automatically), and set filters for test or suspicious visits.
- Tag internal/test visits: Use GA4 Internal Traffic or similar features to exclude staff, VPN, and testing.
- Set automated alerts: Configure alerts for unexpected visits or activity. Adjust thresholds to match your traffic.
- Layer security controls: Put in WAFs, CAPTCHAs on forms, and honeypots so bots get blocked.
- Update defences monthly: Refresh WAF rules, blacklists, and analytics segments. Log updates for visibility.
- Automate escalation: Ensure alerts instantly notify your team if suspicious bot behaviour appears.
- Consider managed solutions: High-traffic sites can rely on SEOSwarm or Cloudflare for robust bot and analytics protection.
- Repeat regularly: Follow this list each month, and more often during busy periods, to stay ahead.

If you stick to this, only real users will drive your decisions. Isn’t that the goal?
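For the automated-alert step in the checklist, one simple approach is to compare today's visits with a recent baseline. The sketch below uses a z-score over the past week. The visit counts are made up, and the threshold of 3 and the `is_traffic_spike` helper are assumptions to adjust for your own traffic.

```python
from statistics import mean, stdev


def is_traffic_spike(history: list[int], today: int, z_threshold: float = 3.0) -> bool:
    """Flag today's visits if they sit far above the recent baseline."""
    if len(history) < 2:
        return False  # not enough data to estimate normal variation
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        return today > baseline
    return (today - baseline) / spread > z_threshold


# Made-up daily visit counts for the past week.
last_week = [1200, 1150, 1300, 1250, 1180, 1220, 1270]

print(is_traffic_spike(last_week, today=1290))  # False: within normal variation
print(is_traffic_spike(last_week, today=3400))  # True: worth a closer look
```

A spike flagged this way isn't necessarily bots. Check it against campaigns and seasonal trends first, then dig into geography and user agents.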

Recommended Resources for Further Learning on Traffic Bots and Website Analytics

Where to Learn More

Want to boost your analytics skills or stay ahead of traffic bots? The right resources keep you sharp, whether you’re a beginner or already experienced.

- Technical Vendor Documentation: Akamai Bot Manager, DataDome, and Human Security Portal share practical bot detection guides, tips, and real-world updates.
- Industry Reports and Benchmarks: Forrester, G2, and the Thales Bad Bot Report deliver trusted peer reviews, emerging trends, and up-to-date statistics.
- Compliance and Regulatory Standards: GDPR, CCPA, and vendor libraries help you stay legal with clear analytics and bot management advice.
- Security and Analytics Blogs: Akamai, Human Security, Feedzai, and Fingerprint reveal fresh threats, hands-on detection, and real case studies.

For a solid start, check vendor glossaries or Human Security learning paths. Then, read the annual reports as each edition is published, and revisit your site’s bot defences monthly to keep them up to date.

Spotting Traffic Bots in Your Analytics

Most analytics tell a story, but traffic bots can turn that story into pure fiction. If you’re making decisions based on inflated numbers, you’re not just wasting budget—you’re risking your brand’s future.

I recommend you start with a weekly review of your analytics, looking for odd spikes, strange geographies, or unnatural engagement. Enable bot filters, tag internal visits, and layer in security tools like WAFs and CAPTCHAs. For high-traffic sites, consider managed bot mitigation platforms—don’t wait for a crisis to act.

The truth is, bot threats will only get smarter. Your defences should evolve just as quickly. In the end, the only numbers that matter are the ones you can trust.

- Wil